Workload management

TrinityX uses OpenHPC for the user space, including SLURM.

SLURM (OpenHPC)

TrinityX is configured to use the default paths for SLURM. On every node, the configuration directory /etc/slurm links to /trinity/shared/etc/slurm, the shared location where all the files reside.
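To check on a given node that /etc/slurm really points at the shared directory, a minimal sketch like the following can be used. It only assumes the two paths named above and compares them after resolving symbolic links; the function name `uses_shared_config` is hypothetical.

```python
import os
from pathlib import Path

# Paths taken from the text above; adjust if your installation differs.
SLURM_DIR = "/etc/slurm"
SHARED_DIR = "/trinity/shared/etc/slurm"


def uses_shared_config(slurm_dir: str = SLURM_DIR, shared_dir: str = SHARED_DIR) -> bool:
    """Return True if slurm_dir resolves to shared_dir (e.g. via a symlink)."""
    link = Path(slurm_dir)
    if not link.exists():
        return False
    # resolve() follows symlinks, so a link and its target compare equal.
    return link.resolve() == Path(shared_dir).resolve()


if __name__ == "__main__":
    print(f"{SLURM_DIR} -> {os.path.realpath(SLURM_DIR)}")
    print("uses shared config:", uses_shared_config())
```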

For better readability, the configuration is split into several files, which are included from the main slurm.conf file.

| File | Description |
|---|---|
| slurm.conf | Main configuration file |
| acct_gather.conf | Slurm configuration file for the acct_gather plugins (see [acct_gather.conf](https://slurm.schedmd.com/acct_gather.conf.html)) |
| slurm-health.conf | Health check configuration (where applicable) |
| slurm-partitions.conf | Partition configuration |
| topology.conf | Slurm configuration file for defining the network topology (see [topology.conf](https://slurm.schedmd.com/topology.conf.html)) |
| cgroup.conf | Slurm configuration file for cgroup support (see [cgroup.conf](https://slurm.schedmd.com/cgroup.conf.html)) |
| slurmdbd.conf | Slurm Database Daemon (SlurmDBD) configuration file (see [slurmdbd.conf](https://slurm.schedmd.com/slurmdbd.conf.html)) |
| slurm-nodes.conf | Slurm node configuration file |
| slurm-user.conf | Slurm user configuration file (e.g. QoS, priorities) |
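Slurm's slurm.conf supports `Include` statements, which is how the split files can be pulled into the main file. The sketch below lists the files a given slurm.conf includes. The example content is hypothetical (the actual include lines in a TrinityX slurm.conf may differ), and resolving relative paths against /etc/slurm is an assumption of this sketch.

```python
import re
from pathlib import Path
from typing import List

# Matches lines such as "Include /etc/slurm/slurm-nodes.conf" (case-insensitive).
INCLUDE_RE = re.compile(r"^\s*include\s+(\S+)", re.IGNORECASE)


def included_files(conf_text: str, conf_dir: str = "/etc/slurm") -> List[str]:
    """Return the files referenced by Include lines, in order of appearance.

    Relative paths are resolved against conf_dir (an assumption of this sketch).
    """
    files = []
    for line in conf_text.splitlines():
        line = line.split("#", 1)[0]  # drop comments, including commented-out includes
        match = INCLUDE_RE.match(line)
        if match:
            path = Path(match.group(1))
            if not path.is_absolute():
                path = Path(conf_dir) / path
            files.append(str(path))
    return files


# Hypothetical slurm.conf excerpt.
example = """\
ClusterName=cluster
# Include /etc/slurm/disabled.conf
Include /etc/slurm/slurm-nodes.conf
Include slurm-partitions.conf
"""
print(included_files(example))
# ['/etc/slurm/slurm-nodes.conf', '/etc/slurm/slurm-partitions.conf']
```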

By default, Luna generates the node and partition configuration, basing each partition on the node group name. This works well for clusters with a single node type, where a default node definition holds the detailed CPU, core and RAM configuration. When more complexity is desired, for example different node types, the automation can be overridden by manual configuration in these files, or the graphical Slurm configurator can be used.
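The group-based generation can be illustrated with a small sketch: every node gets a `NodeName` line tagged with its group (mirroring the managed block format shown later in this section), and each group becomes one partition. This is not Luna's implementation; the input mapping, the function name `generate_slurm_lines`, and the exact `PartitionName` line are assumptions. Hardware details are left to the `NodeName=DEFAULT` line.

```python
from collections import defaultdict
from typing import Dict, List


def generate_slurm_lines(node_groups: Dict[str, str]) -> List[str]:
    """Build NodeName and PartitionName lines, one partition per group.

    node_groups maps a node name to its group name (hypothetical input).
    """
    lines = []
    partitions = defaultdict(list)
    for node in sorted(node_groups):
        group = node_groups[node]
        # Hardware details are inherited from the NodeName=DEFAULT line.
        lines.append(f"NodeName={node} # GroupName={group}")
        partitions[group].append(node)
    for group in sorted(partitions):
        nodes = ",".join(partitions[group])
        lines.append(f"PartitionName={group} Nodes={nodes} State=UP")
    return lines


groups = {"node001": "compute", "node002": "compute", "gpu001": "gpu"}
for line in generate_slurm_lines(groups):
    print(line)
```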

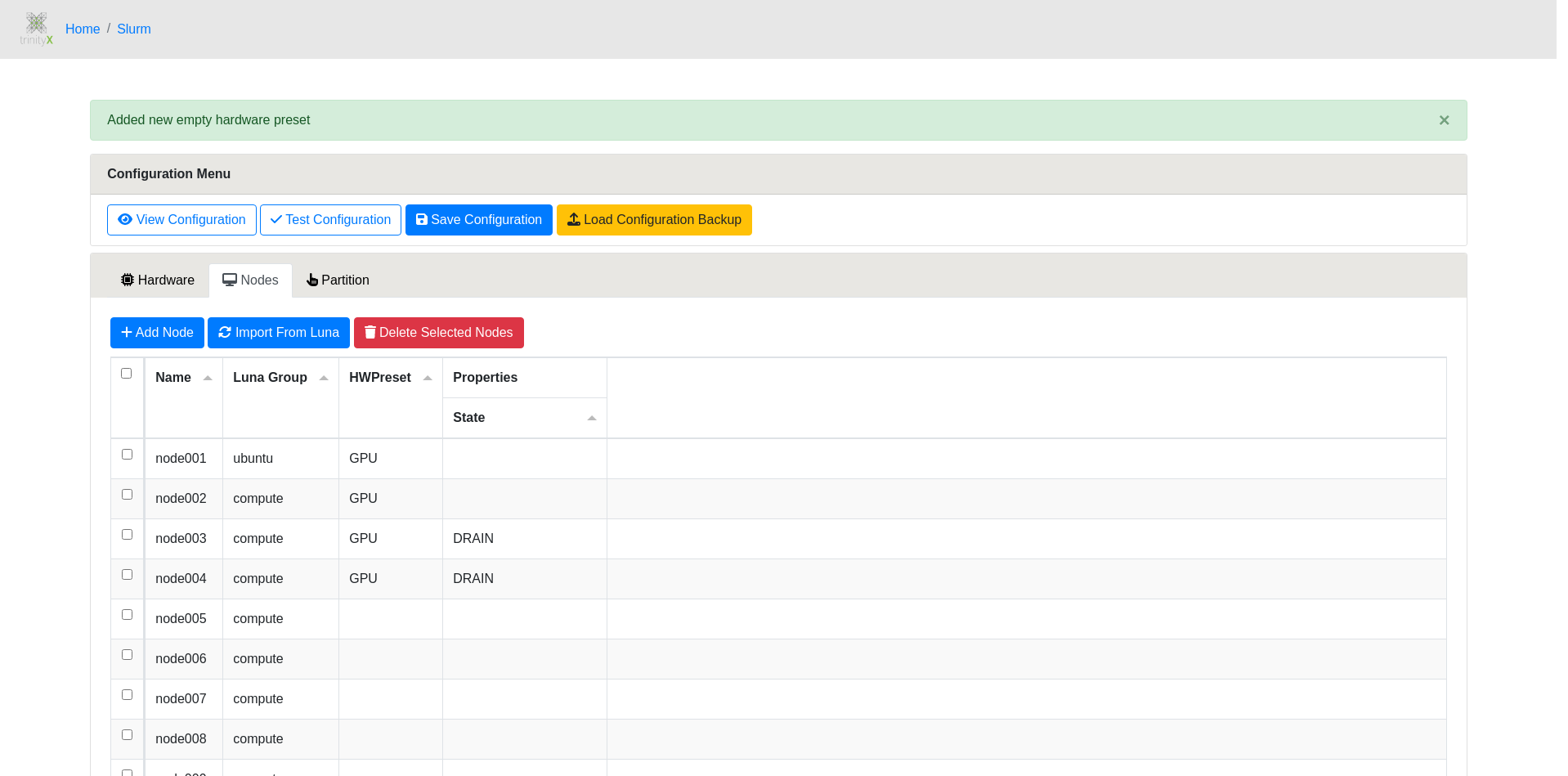

Graphical slurm configuration application

The graphical slurm configurator in action:

Automation versus Configuration

The order of configuration is as follows:

- Luna configures Slurm; this is an automated process.

- More advanced configuration is done through the Slurm Graphical configurator.

- Custom configuration is done manually by the administrator.

Luna configuration

By default, Luna configures the Slurm nodes and groups based on the Luna configuration. It only writes inside the managed block, for example:

```
# TrinityX will only manage inside 'TrinityX Managed block'.
# If manual override is desired, the 'TrinityX Managed block' can be removed.
#### TrinityX Managed block start ####
NodeName=node003 # GroupName=compute
NodeName=node002 # GroupName=compute
NodeName=node001 # GroupName=compute
NodeName=node004 # GroupName=compute
#### TrinityX Managed block end ####
```

No automated changes are made outside the clearly marked managed blocks, so administrators can add their own configuration outside these blocks where needed.
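The managed block rule (rewrite only what lies between the markers, leave everything else alone) can be expressed as a short function. This is a sketch of the rule, not Luna's code; it only recognises the marker lines shown in the example above, and `replace_managed_block` and the `special001` line are hypothetical.

```python
from typing import List

# Marker lines as they appear in the TrinityX managed block example.
START = "#### TrinityX Managed block start ####"
END = "#### TrinityX Managed block end ####"


def _find(lines: List[str], marker: str, begin: int = 0) -> int:
    """Return the index of the first line equal to marker (ignoring surrounding whitespace), or -1."""
    for i in range(begin, len(lines)):
        if lines[i].strip() == marker:
            return i
    return -1


def replace_managed_block(text: str, new_lines: List[str]) -> str:
    """Replace only the lines between START and END; keep everything else.

    If either marker is missing (e.g. the block was removed for a manual
    override), the text is returned unchanged.
    """
    lines = text.splitlines()
    start = _find(lines, START)
    end = _find(lines, END, start + 1) if start >= 0 else -1
    if start < 0 or end < 0:
        return text
    return "\n".join(lines[: start + 1] + new_lines + lines[end:]) + "\n"


original = """\
NodeName=DEFAULT Boards=1 SocketsPerBoard=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=100 State=UNKNOWN
#### TrinityX Managed block start ####
NodeName=node001 # GroupName=compute
#### TrinityX Managed block end ####
NodeName=special001 CPUs=64
"""
print(replace_managed_block(original, [
    "NodeName=node001 # GroupName=compute",
    "NodeName=node002 # GroupName=compute",
]))
```

The administrator's `special001` line outside the block survives the update, and a file without markers is returned untouched.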

Note: When relying on Luna automation, it is important to configure the following line so that it matches the hardware of the default node:

```
NodeName=DEFAULT Boards=1 SocketsPerBoard=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=100 State=UNKNOWN
```
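One way to check the `NodeName=DEFAULT` line is to compare it with the hardware that Slurm itself detects: `slurmd -C` on a node prints a `NodeName=...` line in slurm.conf format. The sketch below compares the two lines; the detected line in the example is hypothetical, and treating `RealMemory` as "may be lower than detected but not higher" is an assumption based on Slurm draining nodes that report fewer resources than configured.

```python
from typing import Dict, Tuple

# Topology keys that should match exactly between NodeName=DEFAULT and the node.
TOPOLOGY_KEYS = ("Boards", "SocketsPerBoard", "CoresPerSocket", "ThreadsPerCore")


def parse_node_line(line: str) -> Dict[str, str]:
    """Parse the Key=Value tokens of a NodeName line, ignoring a trailing comment."""
    line = line.split("#", 1)[0]
    return dict(tok.split("=", 1) for tok in line.split() if "=" in tok)


def hardware_mismatches(default_line: str, detected_line: str) -> Dict[str, Tuple]:
    """Return {key: (configured, detected)} for values that do not fit the node."""
    configured = parse_node_line(default_line)
    detected = parse_node_line(detected_line)
    problems = {}
    for key in TOPOLOGY_KEYS:
        if configured.get(key) != detected.get(key):
            problems[key] = (configured.get(key), detected.get(key))
    # Assumption: configuring less memory than detected is fine, more is not.
    if "RealMemory" in configured and "RealMemory" in detected:
        if int(configured["RealMemory"]) > int(detected["RealMemory"]):
            problems["RealMemory"] = (configured["RealMemory"], detected["RealMemory"])
    return problems


default = ("NodeName=DEFAULT Boards=1 SocketsPerBoard=1 CoresPerSocket=1 "
           "ThreadsPerCore=1 RealMemory=100 State=UNKNOWN")
# Hypothetical first line of `slurmd -C` output on a compute node.
detected = ("NodeName=node001 CPUs=8 Boards=1 SocketsPerBoard=1 "
            "CoresPerSocket=4 ThreadsPerCore=2 RealMemory=15885")
print(hardware_mismatches(default, detected))
# {'CoresPerSocket': ('1', '4'), 'ThreadsPerCore': ('1', '2')}
```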

Slurm Graphical configurator

When you first use the Slurm Graphical configurator, it asks you to confirm that this approach will be used from now on. This typically happens only once; after that, the managed blocks are changed to show that the configuration is no longer managed by Luna.

As with Luna, the configurator makes no changes outside the managed blocks.

Custom configuration

Removing the managed blocks altogether allows for a completely custom configuration. In this case, TrinityX relies on the administrator to configure Slurm.